en·tro·py

/ˈentrəpē/

Noun

- A thermodynamic quantity representing the unavailability of a system's thermal energy for conversion into mechanical work, often...

- Lack of order or predictability; gradual decline into disorder.

You can skip to the bottom of the page for my short definition, or you can read the whole epic of a definition.

Wikipedia definition

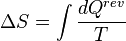

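Entropy is a mathematically defined function that helps to account for the flow of heat through the Carnot cycle. In thermodynamics, it has been found to be more generally useful and it has several other definitions. Entropy was originally defined for a thermodynamically reversible process as

$$\Delta S = \int \frac{dQ_{\text{rev}}}{T}$$

where $dQ_{\text{rev}}$ is an increment of heat transferred reversibly into the system and $T$ is its absolute temperature.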

In statistical mechanics, the notions of order and disorder were introduced into the concept of entropy. Various thermodynamic processes now can be reduced to a description of the states of order of the initial systems, and therefore entropy becomes an expression of disorder or randomness. This is the basis of the modern microscopic interpretation of entropy in statistical mechanics, where entropy is defined as the amount of additional information needed to specify the exact physical state of a system, given its thermodynamic specification. As a result, the second law is now seen by physicists as a consequence of this new definition of entropy vis-à-vis the fundamental postulate of statistical mechanics.
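To make the "additional information" idea a bit more concrete, here is a small sketch of my own (not from the Wikipedia article). It assumes a toy system with only four possible microstates and measures the missing information with Shannon's formula, in bits. The function name `missing_information_bits` and the two example distributions are made up for illustration.

```python
import math


def missing_information_bits(probabilities):
    """Shannon entropy in bits: the average information still needed
    to pin down the exact microstate, given only the probabilities."""
    # Terms with p == 0 contribute nothing, so they are skipped.
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


# "Ordered" toy system: we already know which microstate it is in.
ordered = [1.0, 0.0, 0.0, 0.0]

# "Disordered" toy system: all four microstates are equally likely.
disordered = [0.25, 0.25, 0.25, 0.25]

print(missing_information_bits(ordered))     # 0.0
print(missing_information_bits(disordered))  # 2.0
```

In the ordered case nothing more needs to be said, so the entropy is zero. In the disordered case it takes two yes/no questions to single out one state of four, which is the sense in which more disorder means more entropy.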

Law of Entropy (summed up): nothing will get better on its own.

Everything is in a state of entropy. That is a given; there is no way to be out of it. But when you step in and do what you know is right, entropy lessens.
